Date: 06 March 2013

Time: 13.00-14.00

Place: tba

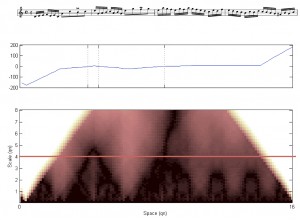

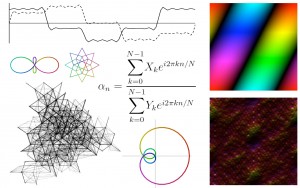

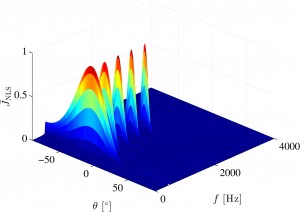

The direction of arrival (DOA) and the pitch of multichannel periodic sources are key parameters in many signal processing methods for, e.g., tracking, separation, enhancement, and compression. Traditionally, the estimation of these parameters has been treated as two separate problems. Separate estimation may make it impossible to resolve sources with similar DOA or pitch, and it may decrease the estimation accuracy. Therefore, joint estimation of the DOA and pitch has recently been considered. In this talk, we present two novel methods for DOA and pitch estimation. Unlike state-of-the-art methods, both yield maximum-likelihood estimates in white Gaussian noise scenarios where the signal-to-noise ratio (SNR) may differ across channels. The first method is a joint estimator, whereas the second uses a cascaded approach with a much lower computational complexity. Simulation results confirm that the proposed methods outperform state-of-the-art methods in terms of estimation accuracy for both synthetic and real-life signals.
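To make the joint estimation problem concrete, here is a minimal sketch of a grid-search nonlinear least-squares estimator of pitch and DOA. It is not the estimator presented in the talk. It assumes a single far-field source, a uniform linear array, complex (analytic) signals, a harmonic model with a known number of harmonics `L`, and per-channel noise variances that are known in advance. Under these assumptions, weighting each channel by its inverse noise variance makes the criterion the Gaussian maximum-likelihood one, and the grid search only approximates its maximizer. The function names, the `spacing` parameter (inter-sensor delay in samples per unit sin θ), and all numerical values are hypothetical.

```python
import numpy as np


def harmonic_matrix(omega0, theta, L, M, N, spacing):
    """Build the (M*N) x L model matrix Z for an L-harmonic source at DOA theta.

    Column l holds exp(j*l*omega0*(t - m*spacing*sin(theta))) for every
    sensor m and sample t, stacked channel by channel (index m*N + t).
    """
    t = np.arange(N)
    m = np.arange(M)
    delays = m * spacing * np.sin(theta)  # far-field ULA delay of each sensor, in samples
    cols = [np.kron(np.exp(-1j * l * omega0 * delays), np.exp(1j * l * omega0 * t))
            for l in range(1, L + 1)]
    return np.stack(cols, axis=1)


def joint_doa_pitch(X, noise_var, L, spacing, omega_grid, theta_grid):
    """Joint 2-D grid search maximizing the weighted NLS criterion x^H W Z a_hat."""
    M, N = X.shape
    x = X.reshape(-1)                  # channel-major, matches harmonic_matrix
    w = np.repeat(1.0 / noise_var, N)  # inverse noise variance of every sample
    best_score, best = -np.inf, (None, None)
    for omega0 in omega_grid:
        for theta in theta_grid:
            Z = harmonic_matrix(omega0, theta, L, M, N, spacing)
            WZ = w[:, None] * Z        # W Z, with W diagonal
            G = Z.conj().T @ WZ        # Z^H W Z
            b = WZ.conj().T @ x        # Z^H W x (W is real)
            a_hat = np.linalg.solve(G, b)        # weighted LS harmonic amplitudes
            score = np.real(np.vdot(b, a_hat))   # x^H W Z (Z^H W Z)^{-1} Z^H W x
            if score > best_score:
                best_score, best = score, (omega0, theta)
    return best


# Synthetic example: 4 sensors, 64 samples, 3 harmonics, different SNR per channel.
rng = np.random.default_rng(0)
M, N, L, spacing = 4, 64, 3, 1.0
omega_true, theta_true = 0.3, 0.4  # rad/sample, rad
amps = np.array([1.0, 0.7 * np.exp(0.5j), 0.5 * np.exp(-1.0j)])
noise_var = np.array([0.01, 0.1, 0.05, 0.2])
clean = (harmonic_matrix(omega_true, theta_true, L, M, N, spacing) @ amps).reshape(M, N)
noise = np.sqrt(noise_var / 2)[:, None] * (rng.standard_normal((M, N))
                                         + 1j * rng.standard_normal((M, N)))
X = clean + noise

omega_hat, theta_hat = joint_doa_pitch(
    X, noise_var, L, spacing,
    omega_grid=np.linspace(0.2, 0.4, 41),
    theta_grid=np.linspace(-1.2, 1.2, 49))
print(f"pitch {omega_hat:.3f} rad/sample, DOA {theta_hat:.2f} rad")
```

The nested loop over both grids is the expensive part of this sketch. A cascaded approach, such as the second method in the talk, avoids this joint two-dimensional search.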

Bio

Jesper Rindom Jensen received the M.Sc. and Ph.D. degrees from the Department of Electronic Systems at Aalborg University in 2009 and 2012, respectively. Currently, he is a postdoctoral researcher at the Department of Architecture, Design & Media Technology at Aalborg University. His research interests include spectral analysis, estimation theory, and microphone array signal processing.